Shell - Make The Future (Engine Bots)

Make the Future Live 2018 is a ‘festival of bright energy ideas’ held at the Queen Elizabeth Olympic Park in London. The free four-day festival aims to explore solutions to the energy challenge, celebrate ingenuity and bold new thinking.

My brief is to create an educational game for kids that features five ‘EngineBot’ avatars displayed on a massive LED screen. Using Kinect-like motion-sensing devices, I should use each player’s body to control a rigged avatar, enabling participants to catch virtual ‘fuel crystals’ as they drop into the play space.

Since Microsoft mothballed the Kinect, I turn to the Orbbec Persee to provide the depth-sensing, skeleton-tracking hardware. Orbbec’s advanced structured light capture technology and built-in ARM processor allow me to create a system capable of accurately tracking five skeletons simultaneously and piping the data over a web socket to a high-powered PC that renders real-time motion-controlled avatars on screen.

Skeletal Tracking

To perform the tracking I use the Nuitrack SDK to puppet a rigged biped in Unity. The requirement is for 5 bots to be controlled simultaneously. The user’s face should be visible through the bot helmet.

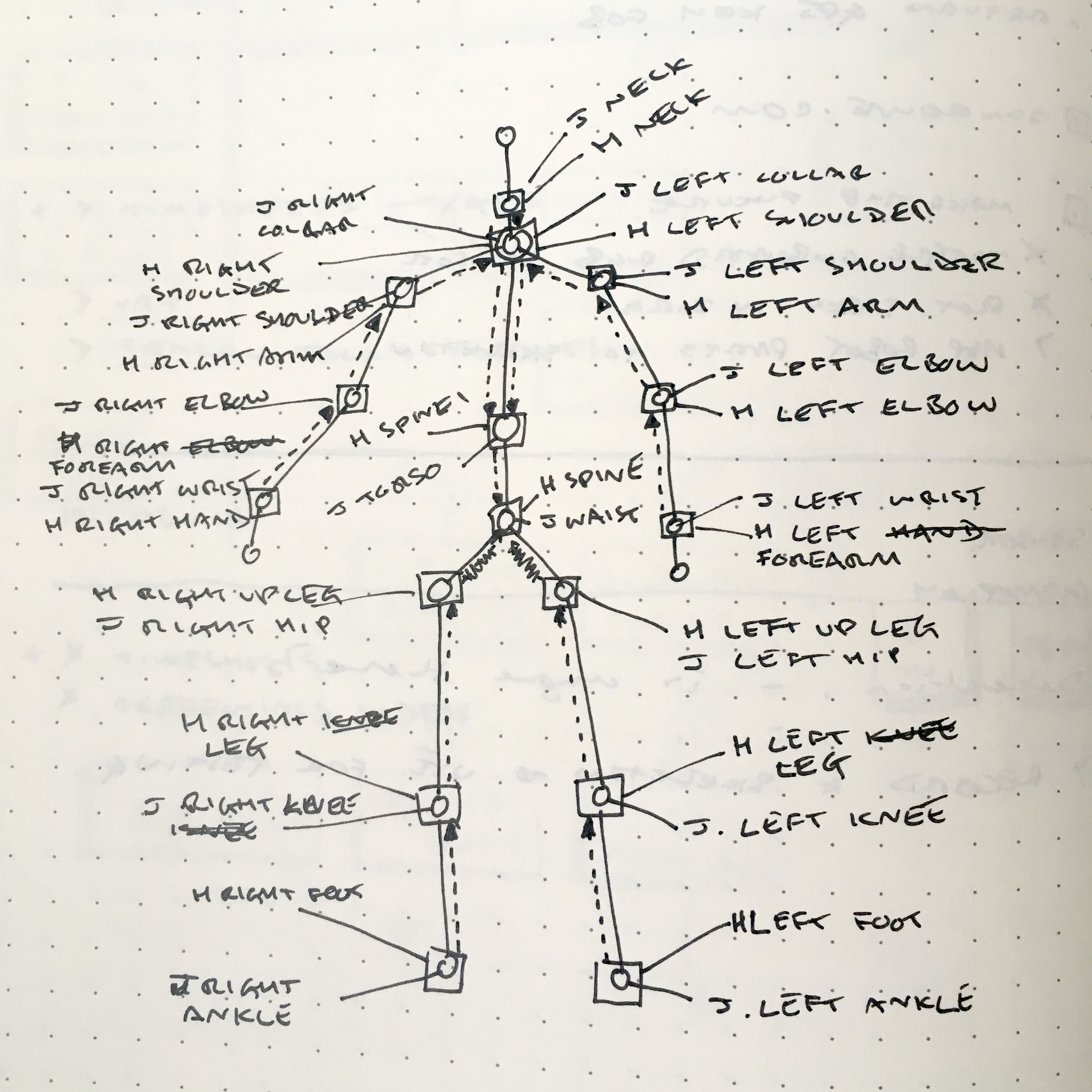

This requires mapping skeleton data provided by the Nuitrack software to bone data for a standard Unity biped rig.
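As a rough illustration of what that mapping involves, here is a minimal sketch in Python (for brevity, not a Unity script). It assumes the tracker delivers named joint positions, and it turns pairs of joints into unit direction vectors that a rig could aim its bones along. The joint names, the bone names and the `BONE_MAP` table are all hypothetical stand-ins, not the actual Nuitrack or Unity identifiers.

```python
import math

# Hypothetical table: rig bone name -> (start joint, end joint) from the tracker.
# These names are illustrative only, not real Nuitrack or Unity identifiers.
BONE_MAP = {
    "LeftUpperArm": ("left_shoulder", "left_elbow"),
    "LeftLowerArm": ("left_elbow", "left_wrist"),
    "RightUpperArm": ("right_shoulder", "right_elbow"),
    "RightLowerArm": ("right_elbow", "right_wrist"),
    "Spine": ("waist", "torso"),
}


def bone_directions(joints):
    """Return a unit direction vector for every bone whose two joints are tracked.

    `joints` maps a joint name to an (x, y, z) position. Bones with a missing
    joint, or with both joints at the same position, are skipped.
    """
    directions = {}
    for bone, (start, end) in BONE_MAP.items():
        if start not in joints or end not in joints:
            continue
        sx, sy, sz = joints[start]
        ex, ey, ez = joints[end]
        dx, dy, dz = ex - sx, ey - sy, ez - sz
        length = math.sqrt(dx * dx + dy * dy + dz * dz)
        if length == 0:
            continue
        directions[bone] = (dx / length, dy / length, dz / length)
    return directions
```

In a real rig each direction would still need converting into a bone rotation relative to the rig's rest pose, which this sketch leaves out.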

I find that the Persee can track a maximum of 3 skeletons simultaneously. Theoretically it is possible to track more, but there is no way of changing the device configuration. So I decide to run three units and track 2 bodies on each (or 2-1-2 actually, since only five are needed). This approach shows promise but throws up another problem: tracking appears to fail towards the edge of each unit’s field of view.

Knowing that the depth sensor is a structured light scanner that works by projecting a pattern of infrared light into the space, I theorise that overlapping areas of projection are confusing the sensor readings. There are two commonly used methods of structured light scanning: one uses a grid pattern and the other a random-ish speckle pattern. I need to know which the Persee is using.
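To get a feel for how much the projections might overlap, a back-of-envelope calculation helps. The sketch below assumes the units sit in a straight line facing the same way, and that each projector covers a simple horizontal cone. The function names and all parameter values are hypothetical; they are not Persee specifications.

```python
import math


def coverage_width(distance_m, hfov_deg):
    """Width of the strip one projector covers at a given distance.

    Simple pinhole geometry: width = 2 * d * tan(fov / 2).
    """
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def overlap_width(distance_m, hfov_deg, spacing_m):
    """Width of the region lit by two adjacent, parallel projectors.

    Returns 0.0 when the cones have not yet met at that distance.
    """
    return max(0.0, coverage_width(distance_m, hfov_deg) - spacing_m)
```

For example, `overlap_width(3.0, 60.0, 2.0)` estimates the shared strip 3 m from two units placed 2 m apart, under an assumed 60 degree cone. The overlap grows with distance, so the back of the play space is affected more than the front.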

Hoping to be able to image the IR projection, I point my iPhone at the Persee projector but see nothing - the iPhone camera filters IR light. Then I remember that, although Apple started filtering IR on the back-facing camera some time ago, the front-facing camera is not filtered. I flip the phone around and this is what I manage to capture:

Evidently a speckle pattern. With the three Persees in a line, I use masking tape to shutter each projector and prevent the projected patterns from overlapping. With a little tweaking I am able to successfully track 5 skeletons. In this video you can see the system select a different bot to control as someone walks across the play space. Tracking isn’t perfect - this is a tricky problem to solve - but it is just about acceptable for a fun kids game.
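The bot selection can be pictured as a simple zoning problem. The sketch below is one plausible way to do it, not necessarily what runs in the installation: each unit's local x coordinate is shifted into a shared play-space coordinate, and the play space is split into equal zones, one per bot. The function names, the offsets and the play-space width are all hypothetical.

```python
import math


def to_play_space_x(sensor_index, local_x_m, sensor_offsets_m):
    """Shift a sensor-local x position into the shared play-space frame.

    `sensor_offsets_m[i]` is the assumed x position of sensor i, measured
    from the left edge of the play space.
    """
    return sensor_offsets_m[sensor_index] + local_x_m


def select_bot(global_x_m, play_width_m, bot_count=5):
    """Return the index of the bot whose zone contains the given x position.

    Positions outside the play space are clamped to the first or last bot.
    """
    zone_width = play_width_m / bot_count
    zone = math.floor(global_x_m / zone_width)
    return max(0, min(bot_count - 1, zone))
```

With assumed offsets of `[1.0, 2.5, 4.0]` in a 5 m wide space, a player standing 0.2 m to the right of the middle unit maps to `select_bot(to_play_space_x(1, 0.2, [1.0, 2.5, 4.0]), 5.0)`, the centre bot. As the player walks sideways the index changes at each zone boundary, which matches the hand-over between bots described above.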